Minglu Zhao

Ph.D. Candidate

Department of Statistics

University of California, Los Angeles

Advisors: Ying Nian Wu and Tao Gao

Email: minglu.zhao@ucla.edu

Google Scholar LinkedIn GitHub


Bio

I am a fifth-year Ph.D. student at UCLA, advised by Ying Nian Wu and Tao Gao. My research explores the intersections of language modeling, decision-making, representation learning, and human cognition, with a focus on developing generative models and latent variable approaches that enhance language understanding and improve decision-making processes in complex environments.

I also received my B.S. in Statistics and B.S. in Cognitive Science from UCLA. Go Bruins!! 🐻

News

- [09/2025] Our paper, Place Cells as Multi-Scale Position Embeddings: Random Walk Transition Kernels for Path Planning 🧠🧭, has been accepted to NeurIPS 2025!

- [08/2025] Our robotics paper, Latent Adaptive Planner for Dynamic Manipulation 🤖📚, has been accepted to CoRL 2025!

- [06/2025] ☀️ I will be joining Meta as a Research Scientist Intern this summer. Catch me for a coffee in the Bay Area 😊!

- [05/2025] Our recent work on Latent-Thought Language Models (LTMs) with Variational Bayes Inference-Time Computation 💬 has been accepted to ICML 2025!

- [04/2025] Our paper on representation modeling for the head direction system has been accepted to CogSci 2025 🧠!

- [02/2025] I started as a part-time Research Scientist Consultant at Natera, Inc., focusing on pre-training multimodal foundation models on genomics data 🧬!

- [01/2025] Our paper on multi-agent RL with Theory-of-Mind-based cooperation modeling has been accepted to ICLR 2025!

- [10/2024] Our paper on representation modeling for the head direction system has been accepted to the Workshop on Symmetry and Geometry in Neural Representations (NeurReps) at NeurIPS 2024!

- [10/2024] Our paper on multi-agent RL with Theory-of-Mind-based cooperation modeling has been accepted to the Workshop on Open-World Agents at NeurIPS 2024!

- [09/2024] Our paper, Latent Plan Transformer, has been accepted to NeurIPS 2024!

- [06/2023] I will be joining GE Global Research as a Research Scientist Intern this summer.

Selected Publications

* denotes equal contribution.

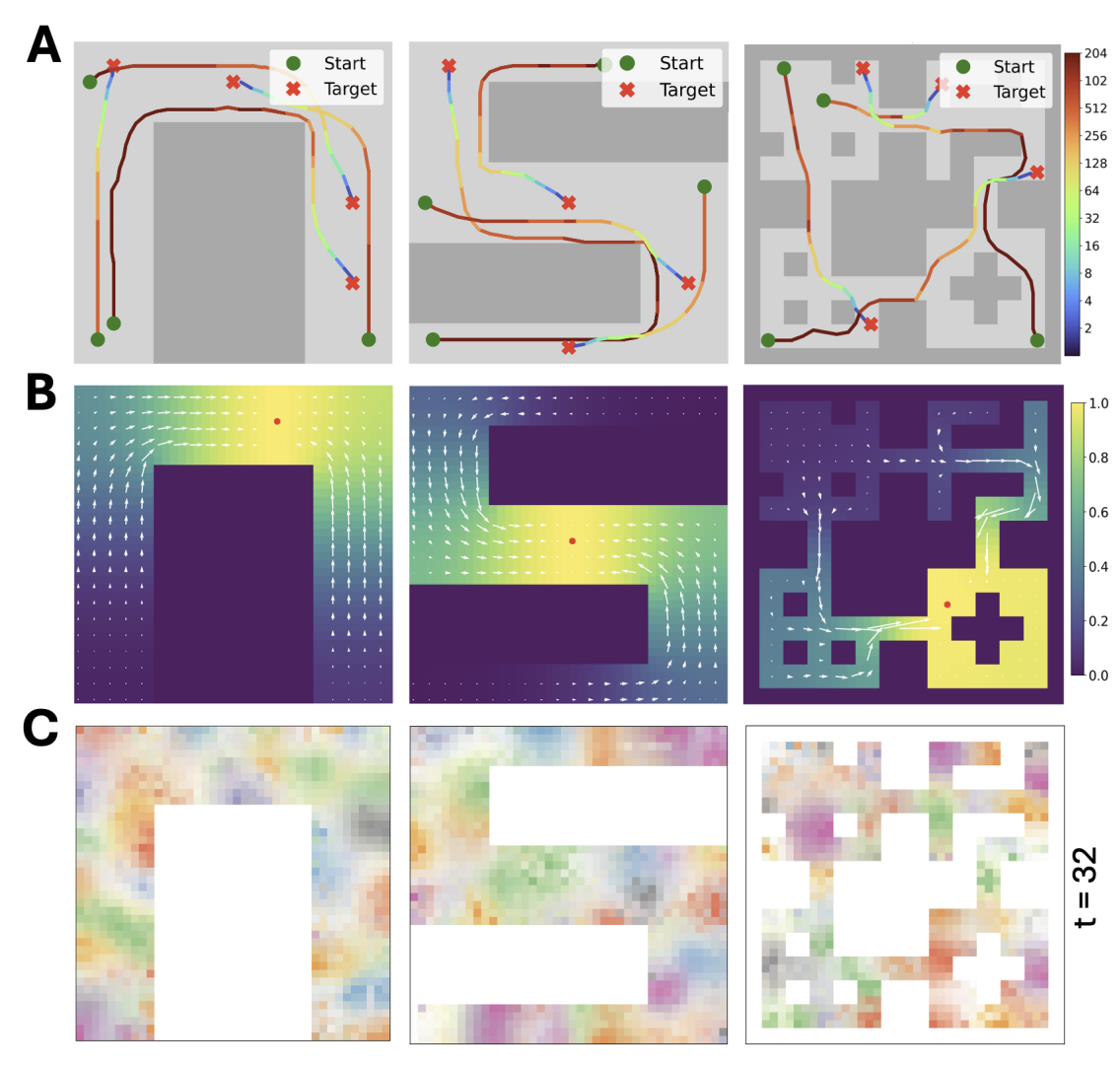

Place Cells as Multi-Scale Position Embeddings: Random Walk Transition Kernels for Path Planning

Minglu Zhao*, Dehong Xu*, Deqian Kong*, Wen-Hao Zhang, Ying Nian Wu


We propose a novel framework that models hippocampal place cells as proximity-preserving neural embeddings encoding multi-scale random walk transitions. Our experiments demonstrate localized place fields, multi-scale tuning, and adaptability to environmental changes, offering a biologically plausible model that unifies spatial and temporal coding, with potential extensions to theta-phase precession and grid cell integration.

Cite Place Cells as Position Embeddings of Multi-Time Random Walk Transition Kernels for Path Planning

@article{zhao2025place,

title={Place Cells as Position Embeddings of Multi-Time Random Walk Transition Kernels for Path Planning},

author={Zhao, Minglu and Xu, Dehong and Kong, Deqian and Zhang, Wen-Hao and Wu, Ying Nian},

journal={Advances in Neural Information Processing Systems},

year={2025}

}

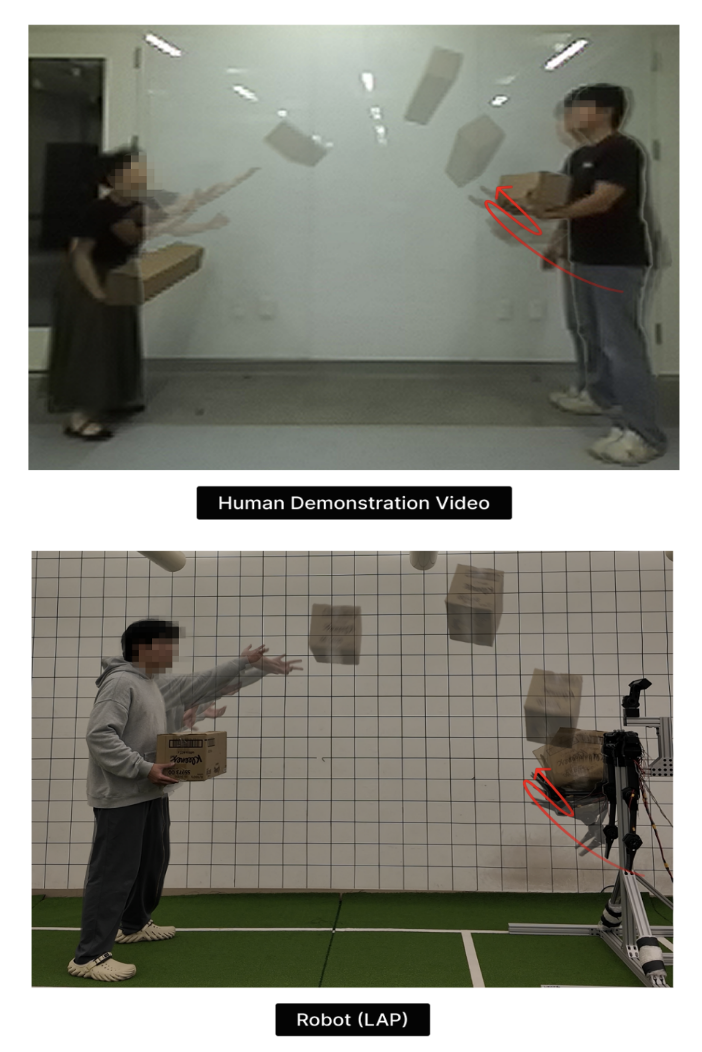

Latent Adaptive Planner for Dynamic Manipulation

Donghun Noh*, Deqian Kong*, Minglu Zhao, Andrew Lizarraga, Jianwen Xie, Ying Nian Wu


We present the Latent Adaptive Planner (LAP), a trajectory-level latent-variable policy for dynamic nonprehensile manipulation (e.g., box catching) that formulates planning as inference in a low-dimensional latent space and is learned effectively from human demonstration videos. In challenging box-catching experiments with varying object properties, LAP achieves superior success rates, trajectory smoothness, and energy efficiency by learning human-like compliant motions and adaptive behaviors.

Cite Latent Adaptive Planner for Dynamic Manipulation

@inproceedings{noh2025latent,

title={Latent Adaptive Planner for Dynamic Manipulation},

author={Noh, Donghun and Kong, Deqian and Zhao, Minglu and Lizarraga, Andrew and Xie, Jianwen and Wu, Ying Nian and Hong, Dennis},

booktitle={Conference on Robot Learning},

year={2025}

}

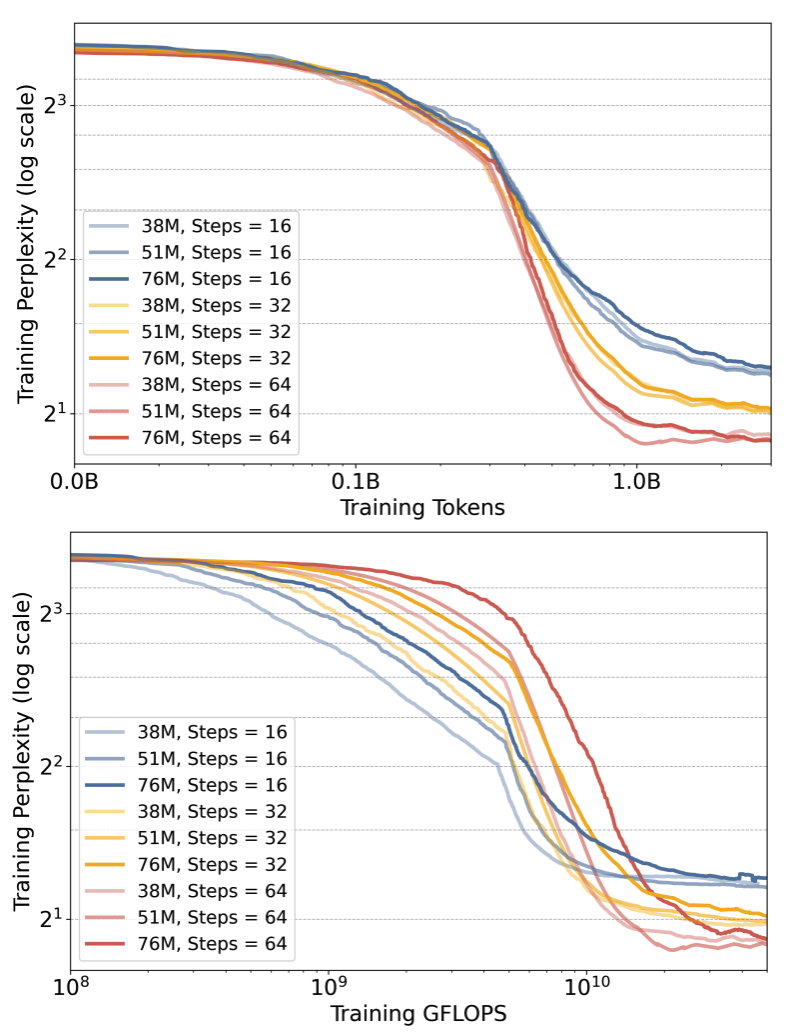

Latent Thought Models with Variational Bayes Inference-Time Computation

Deqian Kong*, Minglu Zhao*, Dehong Xu*, Bo Pang, Shu Wang, Edouardo Honig, Zhangzhang Si, Chuan Li, Jianwen Xie, Sirui Xie, Ying Nian Wu

We introduce Latent-Thought Language Models (LTMs), a novel language model family that incorporates explicit latent thought vectors.

LTMs leverage dual-rate optimization, rapidly updating local latent vectors while gradually refining global decoder parameters.

This approach unlocks new scaling dimensions, achieving better efficiency, perplexity, and zero-shot performance than traditional models. LTMs also exhibit emergent few-shot reasoning, highlighting their potential for advanced language tasks.

Cite Latent Thought Models with Variational Bayes Inference-Time Computation

@article{kong2025latent,

title={Latent Thought Models with Variational Bayes Inference-Time Computation},

author={Kong, Deqian and Zhao, Minglu and Xu, Dehong and Pang, Bo and Wang, Shu and Honig, Edouardo and Si, Zhangzhang and Li, Chuan and Xie, Jianwen and Xie, Sirui and Wu, Ying Nian},

journal={International Conference on Machine Learning},

year={2025}

}

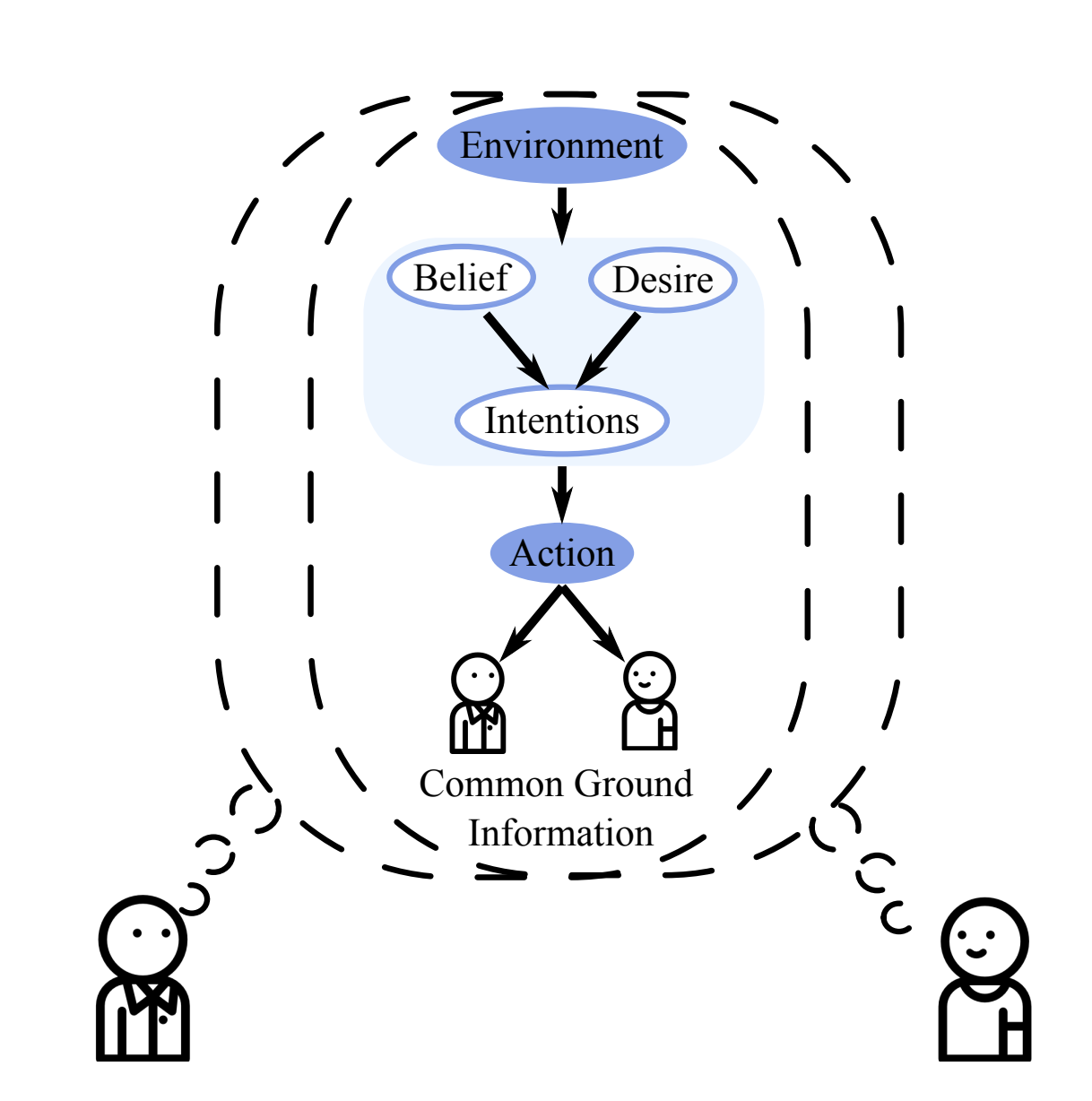

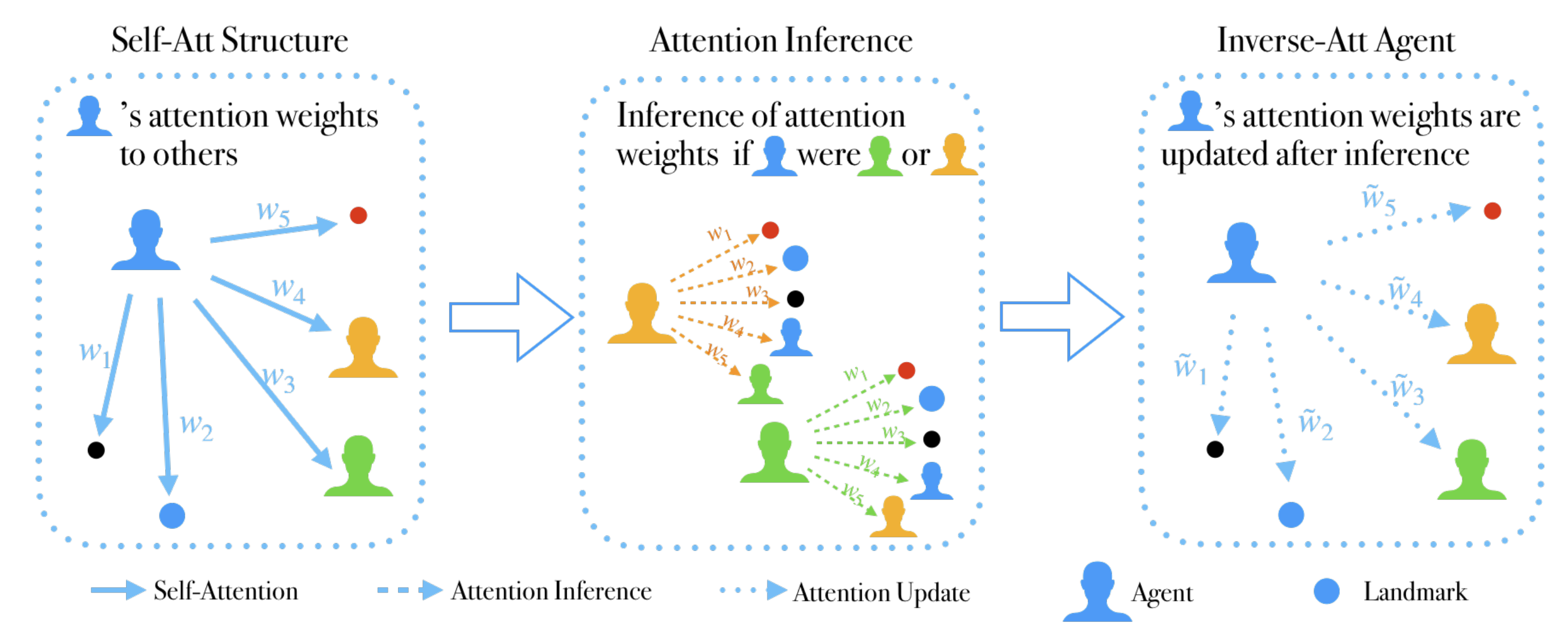

Inverse Attention Agent in Multi-Agent System

Qian Long*, Ruoyan Li*, Minglu Zhao*, Tao Gao, Demetri Terzopoulos


We introduce Inverse Attention Agents, leveraging Theory of Mind concepts through an attention mechanism to enable adaptability in dynamic multi-agent environments. These agents infer the goals and attentional states of other agents, refining their attention weights for improved decision-making. Tested across cooperative, competitive, and mixed tasks, our approach enhances performance and human-like cooperation compared to conventional models.

Cite Inverse Attention Agent in Multi-Agent System

@article{long2024inverse,

title={Inverse Attention Agent for Multi-Agent System},

author={Long, Qian and Li, Ruoyan and Zhao, Minglu and Gao, Tao and Terzopoulos, Demetri},

journal={International Conference on Learning Representations (ICLR)},

year={2025}

}

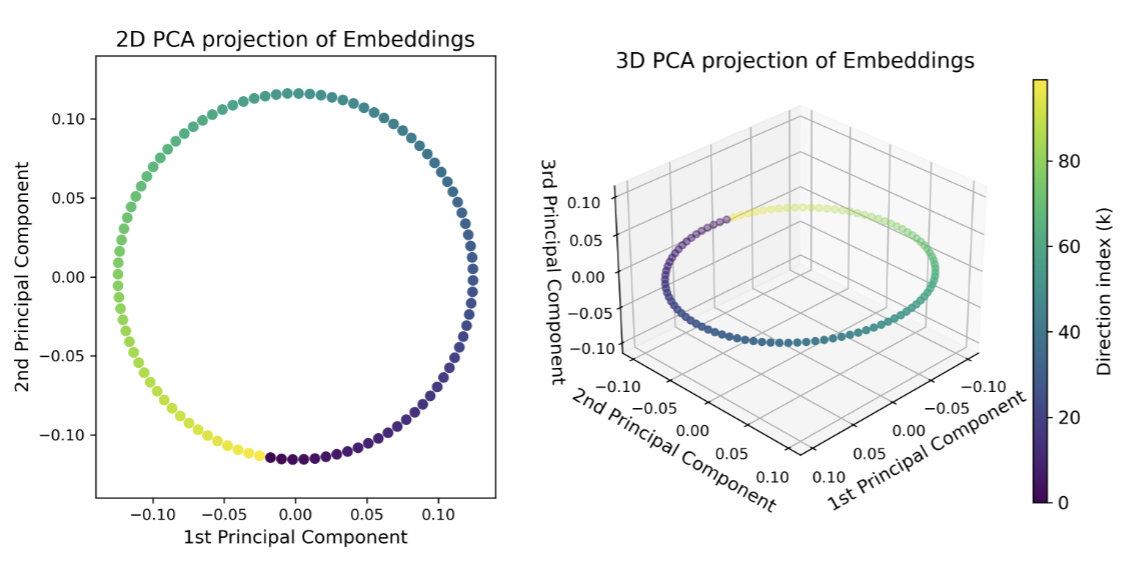

A Minimalistic Representation Model for Head Direction System

Minglu Zhao, Dehong Xu, Deqian Kong, Wen-Hao Zhang, Ying Nian Wu


We propose a minimalistic representational model for the Head Direction (HD) system, a crucial component of spatial navigation in mammals. Our model leverages the symmetry of the rotation group U(1) and the inherent circular geometry of head direction. We develop fully connected and convolutional versions of the model, both of which learn a high-dimensional representation of head direction that captures essential properties of HD cells.

Cite A minimalistic representation model for head direction system

@inproceedings{zhao2025head,

title={A minimalistic representation model for head direction system},

author={Zhao, Minglu and Xu, Dehong and Kong, Deqian and Zhang, Wen-Hao and Wu, Ying Nian},

booktitle={Proceedings of the annual meeting of the cognitive science society},

year={2025}

}

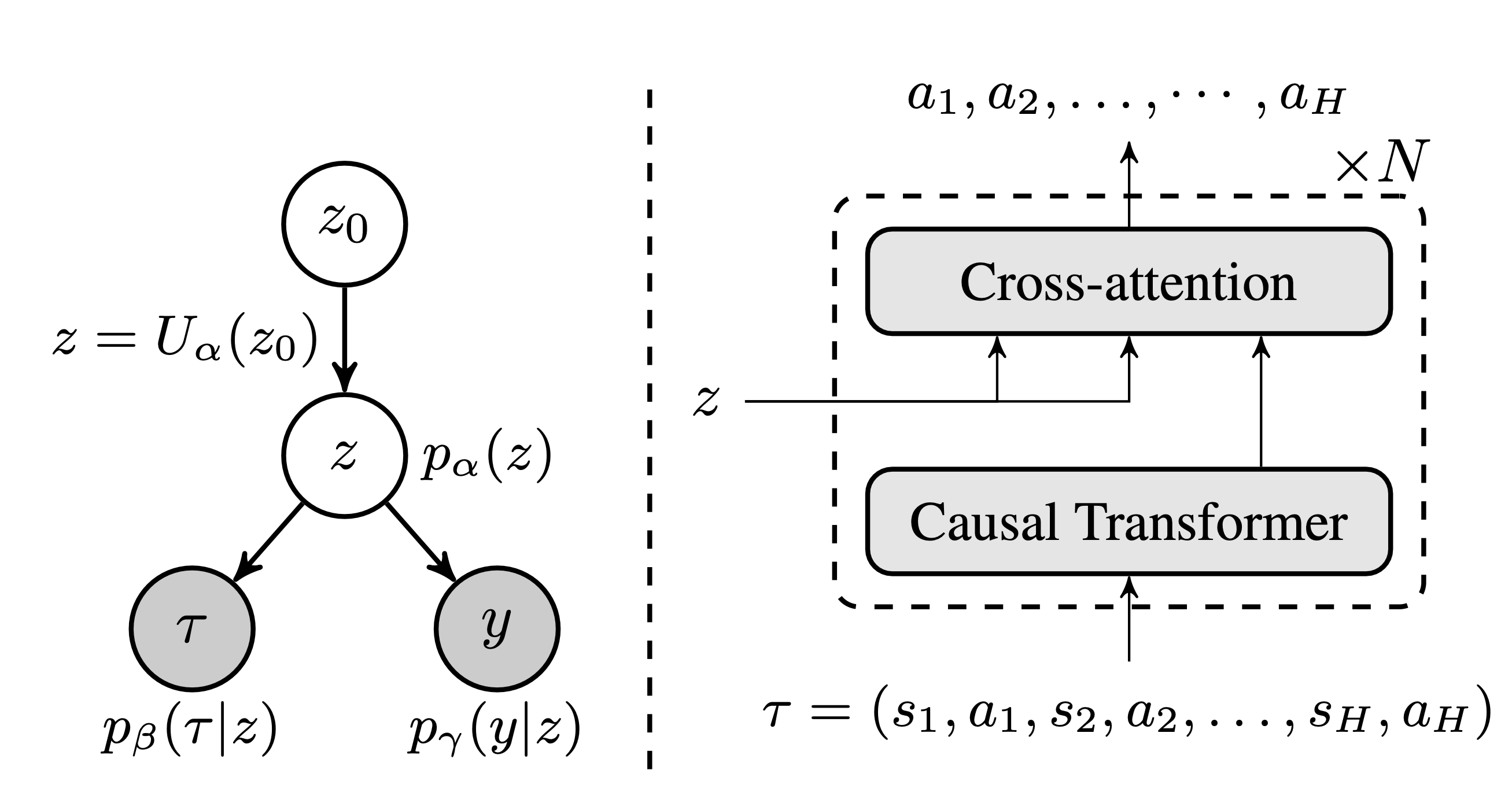

Latent Plan Transformer: Planning as Latent Variable Inference

Deqian Kong*, Dehong Xu*, Minglu Zhao*, Bo Pang, Jianwen Xie, Andrew Lizarraga, Yuhao Huang, Sirui Xie*, Ying Nian Wu


We introduce the Latent Plan Transformer (LPT), a novel model that uses a latent space to connect a Transformer-based trajectory generator with the final return. This architecture enables planning without step-wise rewards, addressing temporal consistency challenges in long-horizon tasks. LPT is trained by maximum likelihood estimation on trajectory-return pairs, with posterior sampling of latent variables yielding consistent sub-trajectory abstractions. At inference time, LPT infers the latent variable from the expected return, realizing a planning-as-inference approach.

Cite Latent Plan Transformer: Planning as Latent Variable Inference

@article{kong2024latent,

title={Latent Plan Transformer for Trajectory Abstraction: Planning as Latent Space Inference},

author={Kong, Deqian and Xu, Dehong and Zhao, Minglu and Pang, Bo and Xie, Jianwen and Lizarraga, Andrew and Huang, Yuhao and Xie, Sirui and Wu, Ying Nian},

journal={Advances in Neural Information Processing Systems},

year={2024}

}

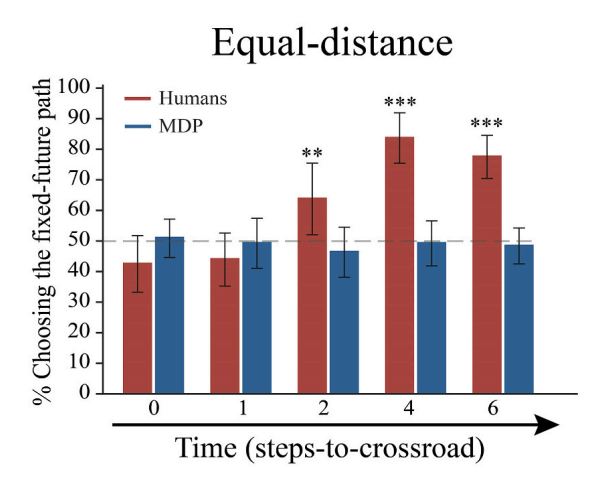

Intention beyond desire: Spontaneous intentional commitment regulates conflicting desires

Shaozhe Cheng, Minglu Zhao, Ning Tang, Yang Zhao, Jifan Zhou, Mowei Shen, Tao Gao


In Cognition, 2023

We explore how coherent actions emerge from conflicting desires, contrasting classical desire-driven behavior with intention-driven action. Through 2D navigation games, we identify three unique markers of human intentional commitment—goal perseverance, self-binding, and temporal leap—that distinguish human actions from purely desire-driven agents. Our findings suggest that humans form committed intentions to manage conflicting desires, enhancing predictability and reducing computational load in action planning.

Cite Intention beyond desire: Spontaneous intentional commitment regulates conflicting desires

@article{cheng2023intention,

title={Intention beyond desire: Spontaneous intentional commitment regulates conflicting desires},

author={Cheng, Shaozhe and Zhao, Minglu and Tang, Ning and Zhao, Yang and Zhou, Jifan and Shen, Mowei and Gao, Tao},

journal={Cognition},

volume={238},

pages={105513},

year={2023},

publisher={Elsevier}

}

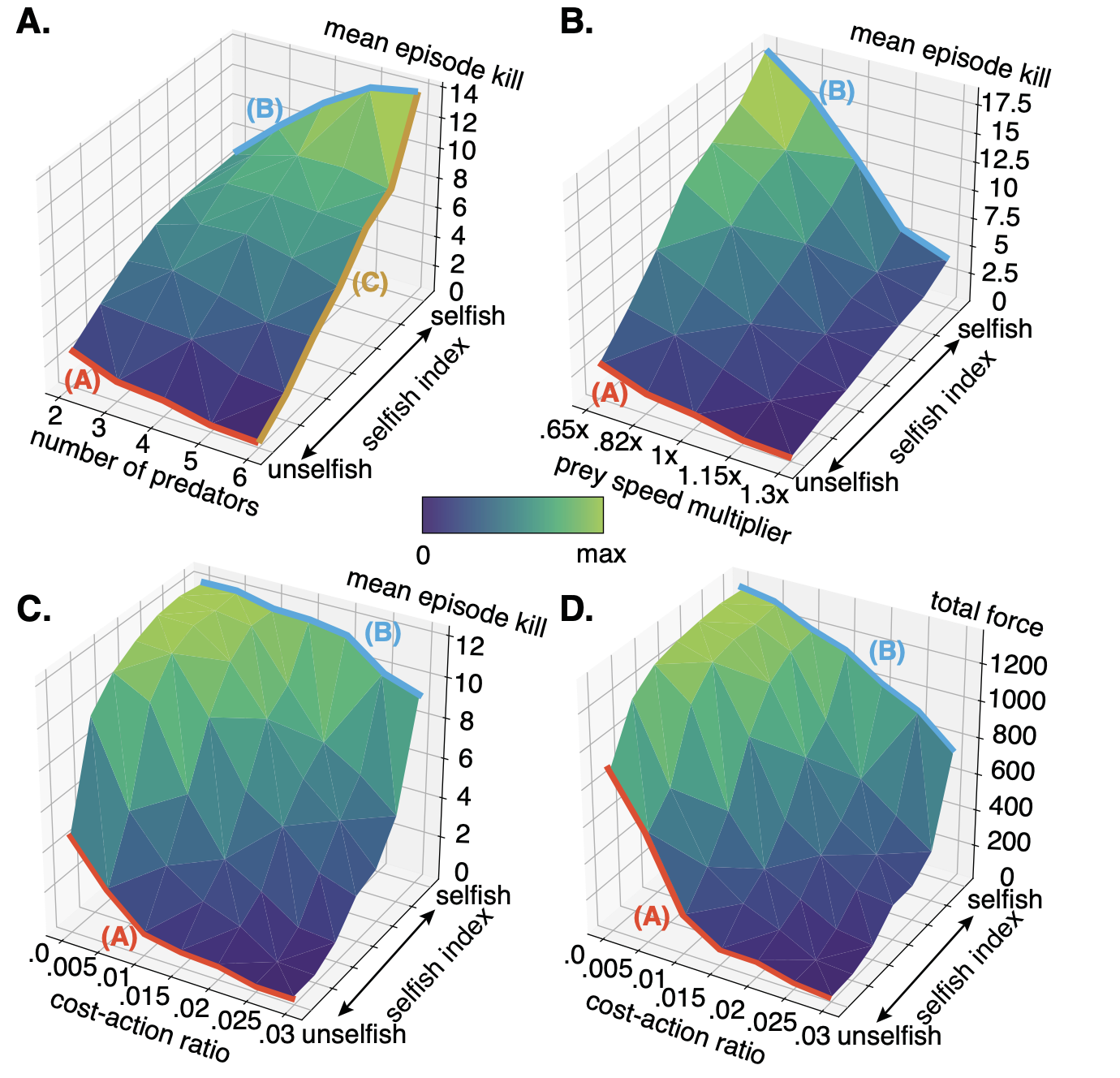

Sharing rewards undermines coordinated hunting

Minglu Zhao, Ning Tang, Annya L Dahmani, Yixin Zhu, Federico Rossano, Tao Gao


In Journal of Computational Biology, 2022

We investigate the impact of reward sharing on coordinated hunting using Multi-Agent Reinforcement Learning (MARL) and reveal a surprising result: rather than facilitating coordination, sharing rewards undermines it, owing to issues such as the free-rider problem and coordination limits at larger group sizes. Individually rewarded agents outperform those sharing rewards, particularly in challenging scenarios. Our results suggest that reward sharing may not be crucial for animal coordination, challenging assumptions in AI models that rely on shared rewards to motivate group coordination.

Cite Sharing rewards undermines coordinated hunting

@article{zhao2022sharing,

title={Sharing rewards undermines coordinated hunting},

author={Zhao, Minglu and Tang, Ning and Dahmani, Annya L and Zhu, Yixin and Rossano, Federico and Gao, Tao},

journal={Journal of Computational Biology},

volume={29},

number={9},

pages={1022--1030},

year={2022},

publisher={Mary Ann Liebert, Inc.}

}

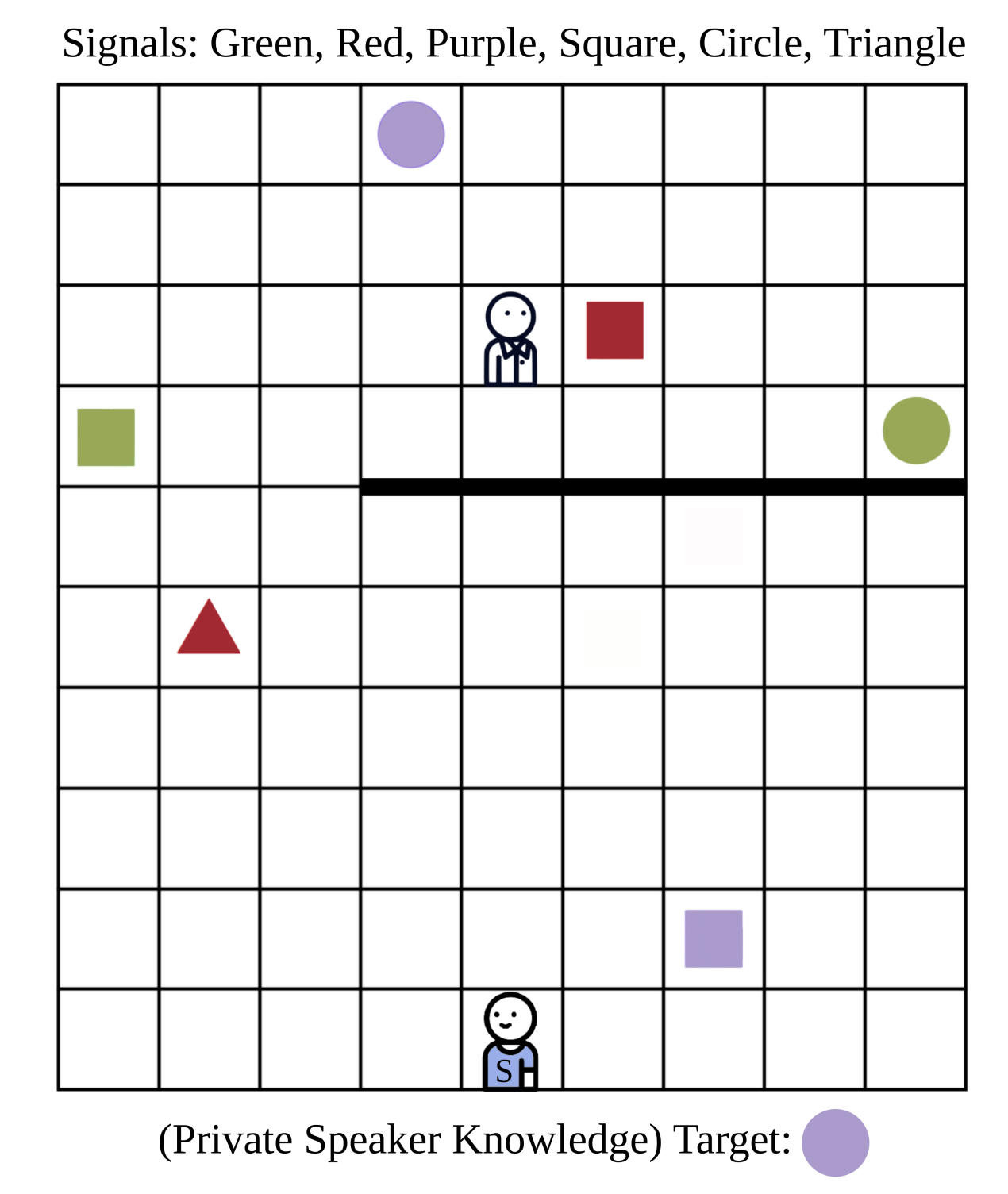

Modeling communication to coordinate perspectives in cooperation

Stephanie Stacy, Chenfei Li, Minglu Zhao, Yiling Yun, Qingyi Zhao, Max Kleiman-Weiner, Tao Gao


We introduce the Imagined We for Communication framework, a model where agents leverage shared agency to interpret overloaded signals in ambiguous contexts. By simulating rational cooperators, our model demonstrates strong performance in high-ambiguity settings, even with minimal reasoning depth, underscoring how shared knowledge and cooperative logic support effective communication.

Cite Modeling communication to coordinate perspectives in cooperation

@inproceedings{stacy2021modeling,

title={Modeling communication to coordinate perspectives in cooperation},

author={Stacy, Stephanie and Li, Chenfei and Zhao, Minglu and Yun, Yiling and Zhao, Qingyi and Kleiman-Weiner, Max and Gao, Tao},

booktitle={Proceedings of the annual meeting of the cognitive science society},

year={2021}

}

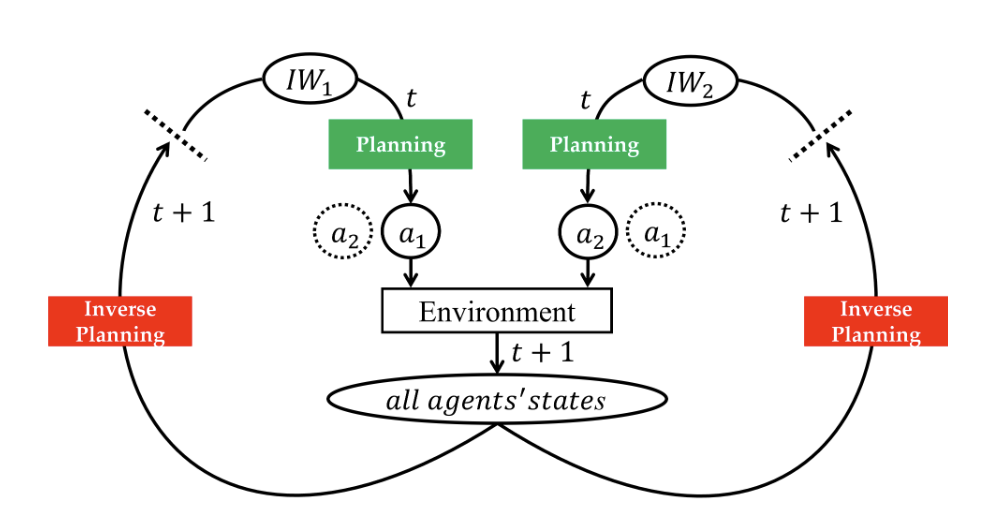

Bootstrapping an Imagined We for Cooperation

Ning Tang, Stephanie Stacy, Minglu Zhao, Gabriel Marquez, Tao Gao


We develop a Bayesian Theory-of-Mind-based framework named the Imagined We (IW), showing how agents can reliably converge on a joint intention in uncertain, multi-choice settings through bootstrapping. In a real-time cooperative hunting task, our model proves resilient to challenges such as many choices, approximate partner models, and noisy perception, highlighting its robustness in maintaining joint commitment under imperfect conditions.

Cite Bootstrapping an Imagined We for Cooperation

@inproceedings{tang2020bootstrapping,

title={Bootstrapping an Imagined We for Cooperation},

author={Tang, Ning and Stacy, Stephanie and Zhao, Minglu and Marquez, Gabriel and Gao, Tao},

booktitle={Proceedings of the annual meeting of the cognitive science society},

year={2020}

}

Teaching

- STATS 10 Introduction to Statistical Reasoning

- STATS 20 Introduction to Statistical Programming with R

- STATS 21 Python and Other Technologies for Data Science

- STATS 100A Introduction to Probability

- STATS 101A Introduction to Data Analysis and Regression

- STATS 102C Introduction to Monte Carlo Methods